Implementation and Issues

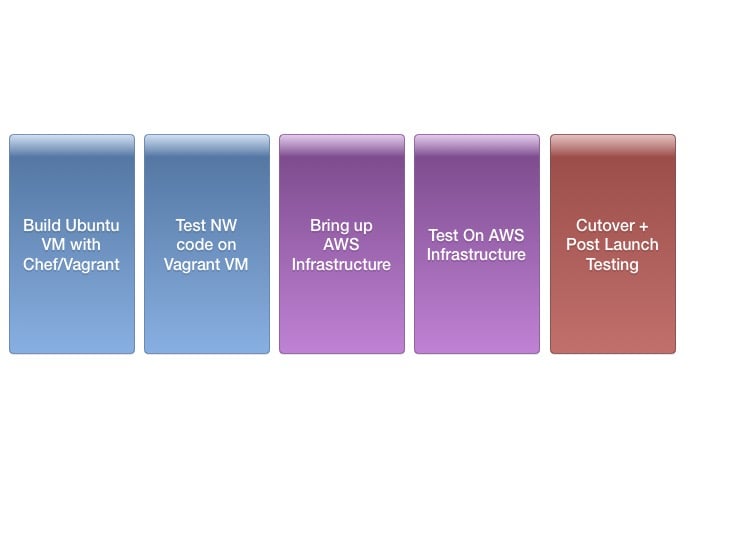

With most of our major design issues decided, we moved into implementation and testing. At a very high level, our plan looked like this:

Cutover planning

From a staffing perspective, when work began on the cutover in late 2014, the DevOps engineering team’s muscle mass and memory were concentrated as individual nodes dedicated to solving for volume of tasks serially. Engineers with specific domain experience launched tools, static pages, and jointly covered site incidents and triage. Project time durations were limited to less than two weeks of effort and involved one engineer solving a very localized set of problems.

To be successful in undertaking a project of the scale, time commitment and complexity of an AWS migration, cutover required a paradigm shift in the team structure, operating process and communication. A defensible planning instrument that reflected the on- the-ground reality by the engineers working on the technical elements while providing management a reasonable indicator of progress and work yet to be accomplished needed to be constructed.

So what did we do to smooth the path to project success? As part of project initiation and planning, the team undertook several key decisions:

- Defined/scoped a project plan with clearly articulated acceptance criteria, identified hard and soft dependencies as well as a level of effort (LOE) estimation, and established ownership based on subject area expertise and slack.

- Got the core team to sit together, a simple undertaking that paid off in reducing coordination costs and building up unit cohesion. The clustering effect acted as a bonding agent for the team that cascaded out to the rest of engineering and NerdWallet as a whole.

- Set up daily standups with full team participation and had other teams members available as needed.

- Developed a detailed launch plan with executive review and broadened the scope of testing to include the entire company.

- Built a self-service model for coordination communication and documentation.

- Ensured that we had management buy-in for everyone on our development team to stop product development and instead devote time to testing in the final days of the cutover.

To resolve the project resourcing problem, the team was reorganized along three major tracks that could proceed mostly in parallel:

- One team of developers had to figure out how we will keep our existing MySQL database and our new instance in AWS up to date before and during the migration.

- One team of developers focused on upgrading our core software (Apache, PHP, Linux packages, etc.) and making sure our site worked with the new infrastructure. We worked off of a snapshot updated every night until the data migration issue was figured out.

- One team of developers and business owners put together a comprehensive manual test plan for our site (as most pieces of the site were tested only at the unit level).

Data migration

We initially set up an RDS instance in AWS to act as our new MySQL master. It was fairly straightforward to spin this instance up and configure it as a MySQL replication slave to our existing instance. The major pain point was simply taking an entire database dump and making sure the binlog was in sync.

However, we ran into a nasty problem with times when we ran consistency checks against the slave. Our existing MySQL databases were set to the U.S./Pacific time zone. RDS databases are always set to UTC, and Amazon does not let you edit this setting. Since we do use queries with MySQL time functions, this broke replication (https://dev.mysql.com/doc/refman/5.7/en/replication-features-timezone.html).

Changing our master server to use UTC instead of Pacific time turned out to be extremely tricky too. We had many database connections that did not explicitly set their time zone when connecting to MySQL, and because of MySQL’s implicit time zone conversions for TIME STAMP fields (https://dev.mysql.com/doc/refman/5.5/en/datetime.html), these clients started reading incorrect time stamps as soon as we tried to change the server’s default time zone. We chose to move to hosting our own MySQL instance instead of using RDS since trying to fix all the application code could add months to the project.

The last mistake we made was around “temporary” replication settings. At some point, we set our MySQL master to use STATEMENT based replication in order to let users run Percona’s pt-table-checksum tool to checksum databases. This was definitely not wise as it led to our slaves getting out of sync. Fortunately we were able to reconcile them after detecting the issue.

New software and infrastructure

The software upgrade process went pretty smoothly, but we did run into a few unanticipated issues that would probably affect anyone else doing the same migration.

- Apache 2.2 -> 2.4. Apache has a good page describing issues you may run into upgrading from 2.2 -> 2.4 (https://httpd.apache.org/docs/2.4/upgrading.html).e ran into many of these. NerdWallet tended to use .htaccess files in many places for url rewriting and access control — going through all of these turned out to be time consuming.

- ELB proxies. Many pieces of code assumed that requests from the internet came directly to them, and relied on Apache to determine if an inbound request was SSL; and to grab the IP address of the user’s browser. Neither of these assumptions were correct, and we had to start looking at the X-Forwarded-Proto and X-Forwarded-For headers. Unfortunately, since we hadn’t always extracted these checks into a set of common library functions, we had to hunt and replace across the codebase to find these bugs. This was trickier to test in our Vagrant environment as well because we could not create virtual copies of an ELB.

- Scope creep! Since we decided to make a large number of changes during the cutover, scope creep became a problem for us. There’s always a little bit more refactoring to be done, a bug to fix, etc., and if you are in the weeds, it seems like the logically correct decision to do the refactor. It becomes very hard to go back to the 1,000 foot view and see how the project is tracking overall. After all, even if each minor refactor adds only a half a day of work, once enough of these sneak in, they lead to huge schedule overruns.

Cutover test plan and execution

So, after we resolved all these issues and had a functioning AWS environment, what did our pre-cutover and post-cutover plan look like?

- Prior to the AWS cutover date we ran our suite of automated tests, and involved the entire development team to do ad-hoc manual testing, against a staging version of theenvironment (this had the same production instances, but everything was pointed at a database we knew we would throw away because of a bunch of bogus writes). This identified many of the bugs above (e.g., time stamps, htaccess rewrite rules not working properly, etc.). This was also a good excuse to document the ad-hoc testing we did.

- We also ran load tests (using access logs from our production traffic and multiplying the volume so we knew we had enough capacity to handle traffic growth) to ensure our new servers performed properly.

- We made sure to come up with a checklist of exact tasks that needed to be done during the cutover itself along with who on the engineering team was responsible for doing them. We ran every step of this checklist possible (aside from switching nerdwallet.com’s DNS records) to ensure we didn’t miss any steps.

- The cutover plan itself: put up a maintenance page on the old nerdwallet.com; promote our AWS replica into a master; change our configuration management scripts so all applications point at the new AWS host; then swap DNS over. We decided it was acceptable to take a brief downtime as long as we scheduled the cutover for off-peakhours.

- After the cutover, we set up a war room with the entire engineering team and our business partners to do sanity checking. Bugs were collected and triaged in a Google doc (for ease of submission), and were fixed over the weekend as reports came in. Overall there were a few minor issues, which was great to see.

Post-cutover learnings

- Project postmortem:

- after replanning, delivered on time (1 week ahead of schedule) with original scope intact

- no significant issues immediately after the cutover

- the project was a prerequisite and critical steppingstone toward eliminating single point of failure

- Cutover day launch statistics:

- 6 months of intensive prep work and structural work to get to launch day

- 51 minutes to cutover from old site to new

- included 8 minutes in which the NerdWallet site was not available and

- 43 minutes of quality testing immediately after the cutover

- 5 P0 bugs detected and fixed in first two hours

- 23 verticals with over 130 test cases tested and signed off within 24 hours

- 1 team across NerdWallet. The people involved took a dedicated, no holds barred attitude to make this project happen (this was the secret sauce for the project).

- 6 month post-cutover statistics:

- more than 6 months after the cutover, reduced site down issues as follows:

- Downtime reduction/month

- AWS’ multiple availability zones let us quickly reduce our downtime by 75% per month

- Home page: 23 to 6 min

- Best Credit Card page: 60 to 8 min

- Credit card tool: 61 to 8 min

Where are we now?

- Log aggregation and a centralized monitoring dashboard for AWS and system metrics certainly become more important as your environment scales. We had always used New Relic for application monitoring, and have since added Datadog and Sumo Logic to our monitoring suite. Datadog and Sumo Logic also have native integration with AWS, which allowed us to easily integrate with CloudWatch and other Amazon services.

- After the cutover we have shipped many new Python and node.js services. AWS has definitely allowed us to deploy new code with agility. We are now up to ~60 nodes in our production environment.

- One of the challenges we want to tackle next is how to keep our Vagrant development environment in sync with AWS. As we roll out more services and more AWS managed services, how do we make sure we can use the same deployment and provisioning processes in both environments whenever possible?

Key takeaways

- Database migration will probably be the hardest part of the cutover if you are a Web 2.0 company. Make sure to start the planning and testing for this very early in your cutover project plan!

- Automate everything wherever possible. The upfront cost is higher, but it will more than pay off in the long run. I’d recommend automation even in the early prototyping phase, otherwise trying to remember what you did and turn it into automation recipes can be error-prone.

- Make sure you are thinking of how any decisions you make can be easily reflected onto your staging and development environments. Making all of your environments as similar as possible will make code deploys much simpler. This is particularly important to think about if you want to take advantage of any AWS managed services.

- Internal teamwork and company all–hands–on–deck approach was essential for success on a project of this magnitude.

Acknowledgements and many thanks:

- The rest of the DevOps team – Jason Leong and Tom Sherman

- All of NerdWallet’s partner teams –– Product, Design and Growth for their assistance testing the site.

from NerdWallet

https://www.nerdwallet.com/blog/engineering/migration-aws-part-2/

No comments:

Post a Comment